Marc Loftus: Hi, I'm Marc Loftus, editor of Post Magazine, and I'd like to welcome you to the second in a series of three podcasts sponsored by Dell Technologies. Again, I'm joined by two professionals with expertise in the space. First, I'd like to welcome back Alex Timbs, who heads up business development and alliances for media and entertainment at Dell Technologies. Welcome, Alex.

Alex Timbs: Hi, Marc. Thanks for having me again.

ML: Also joining us again is Jason Lohrey, the CTO of Arcitecta which has created its own comprehensive data management platform called Mediaflux. Welcome, Jason.

Jason Lohrey: Thanks, Marc. Good to be back also.

ML: Great having you guys back. So not long ago in our first episode, which was titled The Era of Data Orchestration, we defined some of the problems that the media and entertainment space is facing. In today's podcast, episode two, we're going to look at the rise of metadata, global namespaces and orchestrated workflows. One of the first things we probably would want to talk about are some of those terms that we're referring to for this podcast. One of them being metadata. A lot of us are already familiar with metadata. It's information about information, really. And I guess we're describing it as the foundation of everything on which decisions are made. So that's what we're keeping in mind in the case of global namespaces, which we're thinking of as heterogeneous, enterprise-wide access to that metadata. And when we talk about orchestration, we're looking at it as the process of making insightful real-time decisions and bringing the correct data together to the right resource in as automated a manner as possible. Those are just some of the definitions that we're keeping in mind as we're addressing this topic. And I guess it all refers to the creative pipeline. Jason, this is something you've touched on in your article. Without a pipeline, there is no creativity and you cannot create without a pipeline. Is that something maybe we want to start off with? I don't know, Jason, if you want to take it from here, or Alex, if you want to jump in.

JL: I'll jump in. There's always a tension between the production of art and artists, artist's creative processes, and bringing some structure to that. But in a contemporary content production pipeline, it involves a lot of people. We need both of those to be working optimally. So you really can't, in this day and age, produce a product, an artful, creative product without a good pipeline and good processes. And of course, without those processes, as I say, there'd be no product. So our focus is not to optimise the creative process per se, but to optimise the processes that happen in the background, automating as much as possible, and remove those processes from view so that the creative process jumps to the fore. What do you think, Alex?

AT: Yeah, I think that the creative pipeline is so important because we've got multiple people, as Jason's hinted at, that are all working towards a common goal. If you're a single artist, maybe the pipeline is not so important. You're an individual. You have your own processes in a way. You still have your own pipeline that you're following, but you don't necessarily collaborate with others. But in a modern workflow, you're collaborating with hundreds, if not thousands of other people. And in digital art creation, regardless what form it takes with multiple people, collaborating or contributing, technology is no longer just a tool, but forms the canvas on which the art is taking form. So that could be thought of as anything from the Wacom the artist is using to draw with, but it could also be consideration for the automation itself. And the reason for this is that the technology must be what supports reduced creative friction in this collaborative process. And just like a traditional artist drawing or an oil painting on canvas, the artist will never accept the tools getting in the way of that creative expression. So it's not dissimilar in the digital world, but when we talk about the pipeline being critically important, we're talking about automation, reducing that creative friction and allowing humans to do higher levels of work rather than focussing on repetitive or mundane tasks. So we want them to come up the stack, the creative stack, and not do stuff that isn't really expressing creativity and is just a process that we could otherwise automate.

ML: I'm always curious as to where that automation comes into play. When you're looking at a production that has many people within one facility, working with many other facilities, that have different artists and specialists within it, what does that mean for one facility automation, getting content to the next link in the chain and readying files for them to accept it in the format that they're expecting it to be in? Is it like, okay, it's quality controlled and now you're getting it the way you should be receiving it. Is it more than a delivery aspect when it comes to automation? Where does automation come into making a pipeline more efficient? You did touch on it by saying not having the artists deal with this minutia that maybe they don't need to deal with.

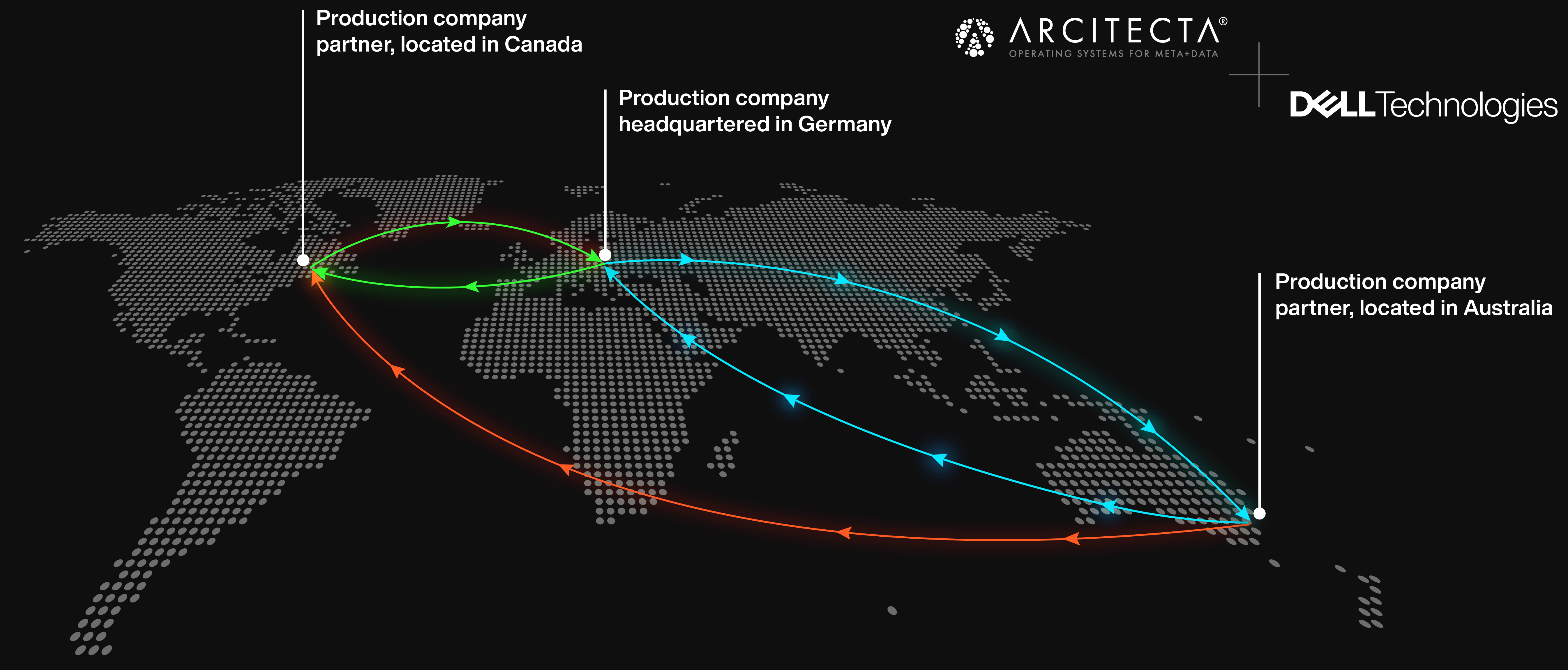

AT: It should be happening at every single level and opportunity. So an example of automation could be using an AI-based rotoscoping tool where a mask could be generated to maybe 90% effectiveness, allowing the artist to focus more on compositing the shot rather than tracing out an image. This is obviously a better use of their time and helps the creative process become more efficient. But in a non-linear workflow where you're always trying to place the creator at the centre, we want to increase the efficiency of outputs of lots of people and processes. So it's very similar in terms of the goal to software development, continuous integration and agility, where small and frequent iterations are what really propels that storytelling forward. So this automation, more broadly, could be a simple proxy generation process where you want to create a different version of it to review in a theatre. It could be automatic scene building prepared for when an artist starts work in the morning to launch their 3D application or applications; that is an automated process. They simply click on the department they're in, the version or the shot they're working on, and that will bring in all of those assets and build that scene so they can start working really rapidly, rather than them manually spending time building that scene and bringing all those assets in. So that's a really clear way to improve efficiency. Ultimately this is, in this new world, particularly important where we have geo-distributed processes and people. This can be all the way through to the movement, protection, deprecation, and status management of data as it moves through the pipeline. The pipeline is no longer thought of as something that exists within the brick walls of a building where everyone's in there together; the pipeline is global. So these automation processes have needed to become a lot more complex, a lot more agile, and a lot more performant.

JL: And of course, as you transcend organisational boundaries, or maybe it's the same organisation, as you move around the globe, you're now dealing with the speed of light and the people in different time zones. So we need to be able to hand off from one site to another, and you need to keep track of where everything is across that global space. Automation might not be so obvious. It may well be that I need to access a particular bit of content, and as a consequence of doing that, contextually, the system needs to automatically transmit associated content to me so that the next time I go to access it, it's accessible with low latency. We've always had a requirement for automation in single sites, but as we start to expand to multiple sites, that becomes even more pressing.

ML: Good points. Alex, you highlighted having these deliverables ready for the next person in line when they come in the next morning or even using AI when it comes to rotoscoping. I know that some of the manufacturers that make those tools, such as the Adobes and the like, are building capabilities into their product releases to simplify it a little bit so that time is better spent elsewhere and not on the more mundane stuff. I guess now, how are Dell and Arcitecta working together, or even independently, to address some of these needs that come up in a pipeline like this, where automation can help these multipoint workflows?

JL: Well, the first thing is we are working together. We've collectively realised that you need the conjunction of software and hardware, each with their strengths, to solve this problem on a global scale.

AT: Yeah, I completely agree with that. At Dell, we bring to the table proven, leading scale-out storage. We've been a leader in the media space for a long time. Arcitecta, in this more modern world where we've got these geo-distributed pipelines, adds a layer of insight and orchestration capability that ultimately supports rapid decision making, advanced automation, and a much wider horizon of workflow options, all in a validated solution. Essentially, we're better together.

ML: Jason, can you explain how Arcitecta fits into the workflow? For example, “I'm a facility that has my own storage and I'm working with other facilities that have their own storage.” Maybe it is Dell, maybe it's other manufacturers' solutions. Where does Arcitecta come in from an installation or a licencing point of view? What is the model there? How do you actually take advantage of it if you're a facility that doesn't already have it?

JL: So there are two ways to do this. We can sit off to the side, that is out of band, and we can scan any kind of storage that presents as file, object, tape, etc. But the best place for us to be positioned is in band where we are the file system, where Mediaflux is in the data path, because we can see every change the millisecond that it happens. So someone saves a file and changes the state of it, we can be transmitting it from one place to another. In other words, it gives us an opportunity to parallelise the data pipeline. A really good example of that is you've got two sites, a geo-distributed workflow. Someone changes the state at a certain point in their process at site A. The moment we see that, each change could be transmitted to site B. Whereas traditionally you would wait until the entire process is completed at site A and then transmit everything you need. So that's a sequential process, whereas when we're in the pipeline, in the data path, we can orchestrate these workflows the moment a trigger occurs. So really, Marc, optimally, we are in the data path, but people often start out by putting us to the side and we can scan and start managing data so we can check in, check out. Once we've got some visibility of the data, we can then put these processes on top, and we can reproject that data out through the front door. So even though we've scanned an external file system or some sort of storage device, we can reproject that through another protocol elsewhere.
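Editor's note: the difference Jason describes between waiting for site A to finish and transmitting each change as it happens can be put into rough numbers. The following is a minimal sketch with made-up save times and a single hypothetical link between two sites. The function names are invented for illustration and are not part of Mediaflux or any Dell product.

```python
# Illustrative only: hypothetical timings, not Mediaflux behaviour or API.

def batch_finish_time(save_times, transfer_secs):
    """Sequential model: wait for the last save at site A, then send every file in turn."""
    start = max(save_times)
    return start + transfer_secs * len(save_times)


def streamed_finish_time(save_times, transfer_secs):
    """Change-triggered model: each save starts a transfer as soon as the link is free."""
    link_free_at = 0
    for saved_at in sorted(save_times):
        begin = max(saved_at, link_free_at)
        link_free_at = begin + transfer_secs
    return link_free_at


saves = [0, 600, 1200, 1800]  # seconds at which files are saved at site A
per_file = 300                # seconds to send one file to site B

print(batch_finish_time(saves, per_file))     # 3000
print(streamed_finish_time(saves, per_file))  # 2100
```

With these assumed numbers, site B has everything 15 minutes earlier when transfers overlap with the work at site A. The gain depends entirely on how save times and transfer times compare in a real pipeline.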

ML: Do you find that your customers are using your solutions on a project basis as opposed to a perennial type of licence, meaning they're working with such and such studios in this scenario on this project, but six months ago, or six months from now, they're going to be working with somebody else and the needs may change? So is it a project-by-project licencing agreement? Is that how it's deployed?

JL: Generally what happens is people either purchase software and it's a perpetual licence or they obtain an annual licence. In terms of a project-by-project basis, sometimes people would like to increase the number of licences when they've got active projects and decrease those in quiet periods. Others have continuous work going, so it just becomes a steady state. One of the other advantages of automation which we should touch on is the fact that it gives us a way to be repeatable. Everything's audited. We know who did what, when; things are forensically reconstructable. And to do that, you need to be able to identify everybody in the pipeline. So when we are in the data path, each person is an active participant in the system, in the fabric, and with that they consume a licence. So the model we have is based on concurrent users. We don't care how much data people have, they can have as much as they like. We're really matching ourselves to the scale of an organisation. And of course we want to add value. So if we're adding value then people will use our software more and therefore consume licences. So I think we're matching this up so that it's a win-win for us and the customer.

AT: I don't know whether I've raised it, but certainly in my career at Animal Logic, there were some really good examples of the importance of making things atomic and tracking dependency. So the scale of that is quite impressive, looking at the Lego films. For example, the Lego brick library, known as LDD Tool, was used to make the models in three-dimensional space. Animal Logic were tracking down to the individual studs on each brick. You might ask, “why would you want to go to that atomic level?” Well, because the director might decide that he wants to change the use of that brick type and swap it out throughout the entire film to a different type. Or the Lego Corporation may decide that they no longer want Brick A used in that film. So do you then have to get every artist to recreate every single shot, every single frame of the film? Or do you just click a button and it automatically swaps that brick out? You'd certainly want the latter. And that was a really good example of atomic automation, where you want to break things down into the smallest possible parts. That same sort of principle is extendable more broadly across pipelines and a range of tools. But because it means that there are so many files, elements, and so much information about that data required to feasibly use this, you need a very advanced system, essentially a database.
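Editor's note: the brick-swap example rests on shots referring to brick types by identifier rather than copying them, so a single tracked change can be applied everywhere. The sketch below is an assumption-laden illustration of that idea only. The `Shot` class, the helper functions and the brick names are hypothetical and do not represent Animal Logic's actual tooling.

```python
from dataclasses import dataclass, field


@dataclass
class Shot:
    name: str
    # Each placement is (brick_type_id, position); shots hold references, not copies.
    placements: list = field(default_factory=list)


def shots_using(shots, brick_type):
    """Dependency query: which shots contain at least one brick of this type?"""
    return [s.name for s in shots if any(b == brick_type for b, _ in s.placements)]


def swap_brick(shots, old_type, new_type):
    """Replace every placement of old_type with new_type; return how many changed."""
    changed = 0
    for shot in shots:
        for i, (brick, pos) in enumerate(shot.placements):
            if brick == old_type:
                shot.placements[i] = (new_type, pos)
                changed += 1
    return changed


shots = [
    Shot("sh010", [("brick_a", (0, 0, 0)), ("brick_b", (1, 0, 0))]),
    Shot("sh020", [("brick_a", (2, 0, 0))]),
]

print(shots_using(shots, "brick_a"))            # ['sh010', 'sh020']
print(swap_brick(shots, "brick_a", "brick_c"))  # 2
print(shots_using(shots, "brick_a"))            # []
```

At film scale the same query and update would run against a database rather than in-memory lists, which is the point Alex makes about needing a very advanced system.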

Improving the quality and efficiency of these pipelines is probably less about new ideas and more about the dynamics and the connections and reducing the friction between them because the human element within these pipelines makes every single pipeline distinct. So reducing friction supports creativity and allows for a better outcome. To achieve that, businesses typically have had this single silo of storage and made that data as fast and available as possible for all these individuals, particularly in non-linear pipelines. This is where every department collaborates; every output from a department is an input for another. We see a lot more of that in Universal Scene Description (USD) pipelines. But now, because of the geo-distributed nature of production, we've had to move from having this single silo of storage behind a firewall to a scenario where we bring the data to the people. That could be small hubs or another business that's a satellite office. It could be a single freelancer that you're only giving access to for a week, and you need to be able to deliver the right data or assets to them to work on in a timely fashion in their home, on a machine that you may not even manage or own.

JL: It's all metadata, of course, isn't it?

AT: Yeah, absolutely. And realistically, network file servers, for the longest time, have been one of the most potent collaboration tools, and they still are. They're the most aligned tool for media creation workflows. Why? Because essentially they're a form of database with a tree. Humans are able to process and share that information on collaboration storage, but the limitations of that are such that you won't be able to go to the granular level. And so you really need to turn to really high-performance databases like Mediaflux to automate processes and to track that level of granularity.

JL: Well actually, if you look at a file system, we've traditionally embedded metadata in the file system by using the directory structure to give us some context and we will name files in a certain way and with multiple bits of metadata stuck together to give us some context. But it only gives you one degree of freedom. You've only got one way to slice that. But in a complex environment, we need to be looking at this entire data space with lots of slices and different perspectives from different people. So we can't rely on just the simple directory tree structure and file naming to encapsulate all of the metadata we need to drive these complex processes. So that's exactly why you need the intersection of a file system and database. When those merge, you can have complex metadata that drives your pipelines, data pipelines, and you can use as much metadata as you like.
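Editor's note: the "one degree of freedom" point can be shown with a small example. A path such as `/proj/filmx/sh010/anim/v003.usd` fixes one slicing order (project, then shot, then department), whereas the same facts held as metadata records can be queried along any axis. This is a minimal sketch using Python's built-in `sqlite3` module. The table layout, column names and sample paths are assumptions for illustration and do not reflect how Mediaflux stores metadata.

```python
import sqlite3

COLUMNS = ("path", "project", "shot", "dept", "status", "artist")

db = sqlite3.connect(":memory:")
db.execute(
    "CREATE TABLE asset (path TEXT, project TEXT, shot TEXT, "
    "dept TEXT, status TEXT, artist TEXT)"
)
db.executemany(
    "INSERT INTO asset VALUES (?, ?, ?, ?, ?, ?)",
    [
        ("/proj/filmx/sh010/anim/v003.usd", "filmx", "sh010", "anim", "approved", "kim"),
        ("/proj/filmx/sh010/light/v001.usd", "filmx", "sh010", "light", "wip", "lee"),
        ("/proj/filmx/sh020/anim/v001.usd", "filmx", "sh020", "anim", "wip", "kim"),
    ],
)


def find(**criteria):
    """Return asset paths matching every given metadata field, sorted by path."""
    for key in criteria:
        if key not in COLUMNS:
            raise ValueError(f"unknown metadata field: {key}")
    where = " AND ".join(f"{key} = ?" for key in criteria)
    sql = "SELECT path FROM asset"
    if where:
        sql += " WHERE " + where
    sql += " ORDER BY path"
    return [row[0] for row in db.execute(sql, tuple(criteria.values()))]


# Slices that cut across the directory tree rather than following it.
print(find(artist="kim"))
print(find(status="wip", dept="anim"))
```

Neither query follows the directory hierarchy: the first gathers one artist's work across shots, and the second finds unfinished animation regardless of where it sits in the tree.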

ML: So we've talked about metadata here. We've talked about automation. We've talked about workflows. How about global namespaces? That's something we haven't specifically targeted here. Is that something, Jason, you'd like to comment on?

JL: Yes. Thanks, Marc. A global namespace exists in two places. At a single site, we can have a namespace that spans all the storage, presents that as a single mount point and hides the details away. But increasingly, the term global namespace refers to geo-distributed workflows and geo-distributed systems. And when you distribute systems around the globe, you're then subject to the laws of physics. That is the speed of light. And it's just not possible to instantaneously move data from one site to another or to all sites at the same time. It takes time and effort. It also costs something; we've got to actually transmit over internet fabrics that we pay for. So we need to make sure we only send the right data at the right time. And that may actually mean that we transmit metadata ahead of actually sending the underlying data so that someone knows what's available, and they pull that through on demand. Or due to a process or some process automation pipeline, we say these 50,000 items need to be transmitted and that happens automatically, but not these other 150,000 items. So we need to embed the use of metadata in our globally distributed pipelines to make sure we're just sending exactly what's needed at the right time. That way we can push the cost down.
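Editor's note: the pattern Jason outlines, sending metadata ahead of the data, pushing only the items a rule selects and pulling the rest on demand, can be sketched in a few lines. Everything here is a simplified assumption: the `Site` class, the function names and the in-memory dictionaries stand in for real storage and networks, and none of it is Mediaflux's API.

```python
class Site:
    def __init__(self, name):
        self.name = name
        self.catalog = {}   # item id -> metadata dict (small, cheap to send)
        self.payloads = {}  # item id -> bytes (large, costly to send)


def publish_metadata(src, dst):
    """Send only the metadata so the remote site knows what exists."""
    for item_id, meta in src.catalog.items():
        dst.catalog[item_id] = dict(meta)


def push_matching(src, dst, rule):
    """Send payloads whose metadata satisfies the rule; return bytes sent."""
    sent = 0
    for item_id, meta in src.catalog.items():
        if rule(meta) and item_id not in dst.payloads:
            dst.payloads[item_id] = src.payloads[item_id]
            sent += len(src.payloads[item_id])
    return sent


def read(dst, src, item_id):
    """Read at the remote site, pulling the payload on demand if it is absent."""
    if item_id not in dst.payloads:
        dst.payloads[item_id] = src.payloads[item_id]
    return dst.payloads[item_id]


site_a, site_b = Site("A"), Site("B")
site_a.catalog = {
    "plate_001": {"shot": "sh010", "status": "approved"},
    "plate_002": {"shot": "sh010", "status": "wip"},
    "plate_003": {"shot": "sh020", "status": "approved"},
}
site_a.payloads = {key: b"x" * 1000 for key in site_a.catalog}

publish_metadata(site_a, site_b)
sent = push_matching(site_a, site_b, lambda m: m["status"] == "approved")

print(sorted(site_b.catalog))   # all three items are visible at site B
print(sent)                     # 2000
print(sorted(site_b.payloads))  # ['plate_001', 'plate_003']
print(len(read(site_b, site_a, "plate_002")))  # 1000, pulled on demand
```

In this toy run, site B can see all three items but only two thirds of the bytes cross the link up front. The remaining item moves only if someone actually opens it.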

ML: That's a great point because you're talking about tons and tons of media here, huge file sizes. Obviously, when it comes to graphics, animation and even camera footage, you don't want to be pushing stuff around that doesn't need to be moved and obviously it's more efficient to only be sending the stuff that you need to send. So knowing that through the metadata and kind of getting in front of it is a good concept.

JL: Yes. And moreover, you don't want individuals to have to make a decision every time they need to send something. That process should be as fluid and as frictionless as possible, hence the need to automate based on context, and that context is provided by the metadata.

AT: All of this is fantastic, but the individual still needs to understand their connections and the outcomes that they want to achieve. So there's no magic bullet, there's no button you can push, and it does it all for you. That's one thing that keeps coming up.

JL: That’s right. An enterprise needs to put some effort in to achieve these outcomes. You can't just magically have it happen. I've always found in the data space that if you lift the conversation that means everybody's conversation gets lifted and people need to concentrate on the business process rather than the mechanics of moving data from one system to another. They now need to think about when do they want to move that? Why do they want to move it? And under what conditions do they move it? These considerations extend to the complete pipeline and automation. So people need to put the effort in to be able to answer those questions. But we're providing systems that enable them to ask those questions and then come up with an answer.

AT: Exactly. It's allowing that thinking to come up the stack, Marc. And so they're not focussing, they're not on the front line fighting fires. It allows them to actually come up the stack and focus on the actual business outcomes they want to achieve. But that can be quite confronting for a lot of customers because they haven't had to do that. So they don't actually know the right questions to ask.

ML: Well, guys, I want to thank you again for participating in this podcast. The Rise of Metadata, Global Namespaces and Orchestrated Workflows. I'd like to have you back for our third episode where we're going to discuss some of the cybersecurity issues that studios are facing. And I think that'll be an interesting conversation as well. So hopefully we can connect in a couple of days.

To read the accompanying article “The Rise of Metadata, Global Namespaces and Orchestrated Workflows” written by Jason Lohrey, the CTO of Arcitecta, visit this link.