What is big data?

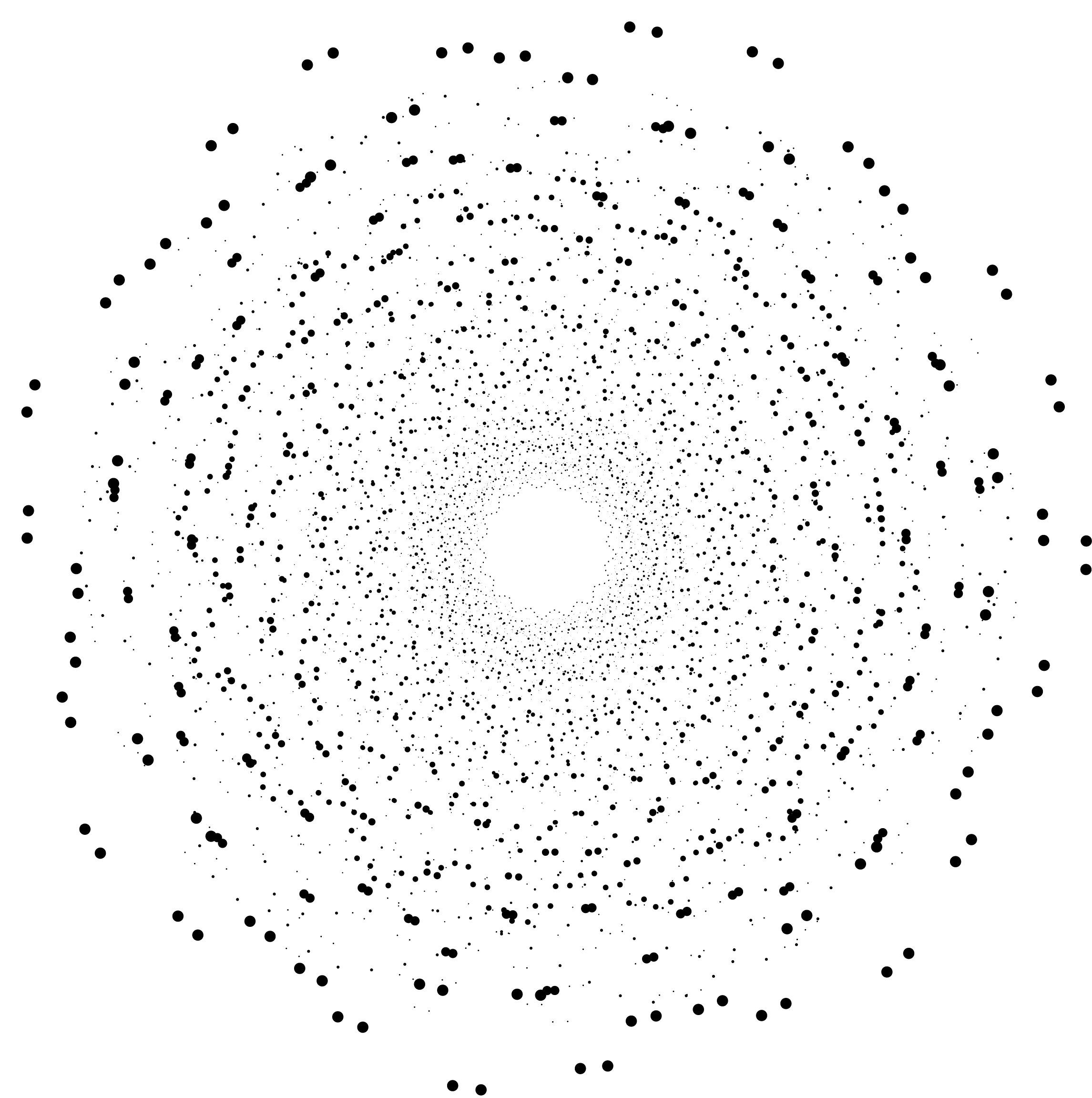

Big data refers to the large volume of structured and unstructured data that is typically generated by scientific instruments, cameras, medical equipment, genome sequencers, and multi-dimensional capture devices. You might recall the image of a black hole that was recently captured by the Event Horizon Telescope. The data behind that image was spread across many files totaling about 5 petabytes, stored on about half a ton of hard drives. That is the equivalent of about 1.39 billion copies of David Bowie’s “Space Oddity”. That’s big data.

At the same time, as instruments advance, you might also hear someone talk about big data in reference to the size of an individual file: for example, a single terabyte-sized file that came off a genome sequencer. This is also big data.

Source: Event Horizon Telescope Collaboration et al.

Big data for the common good

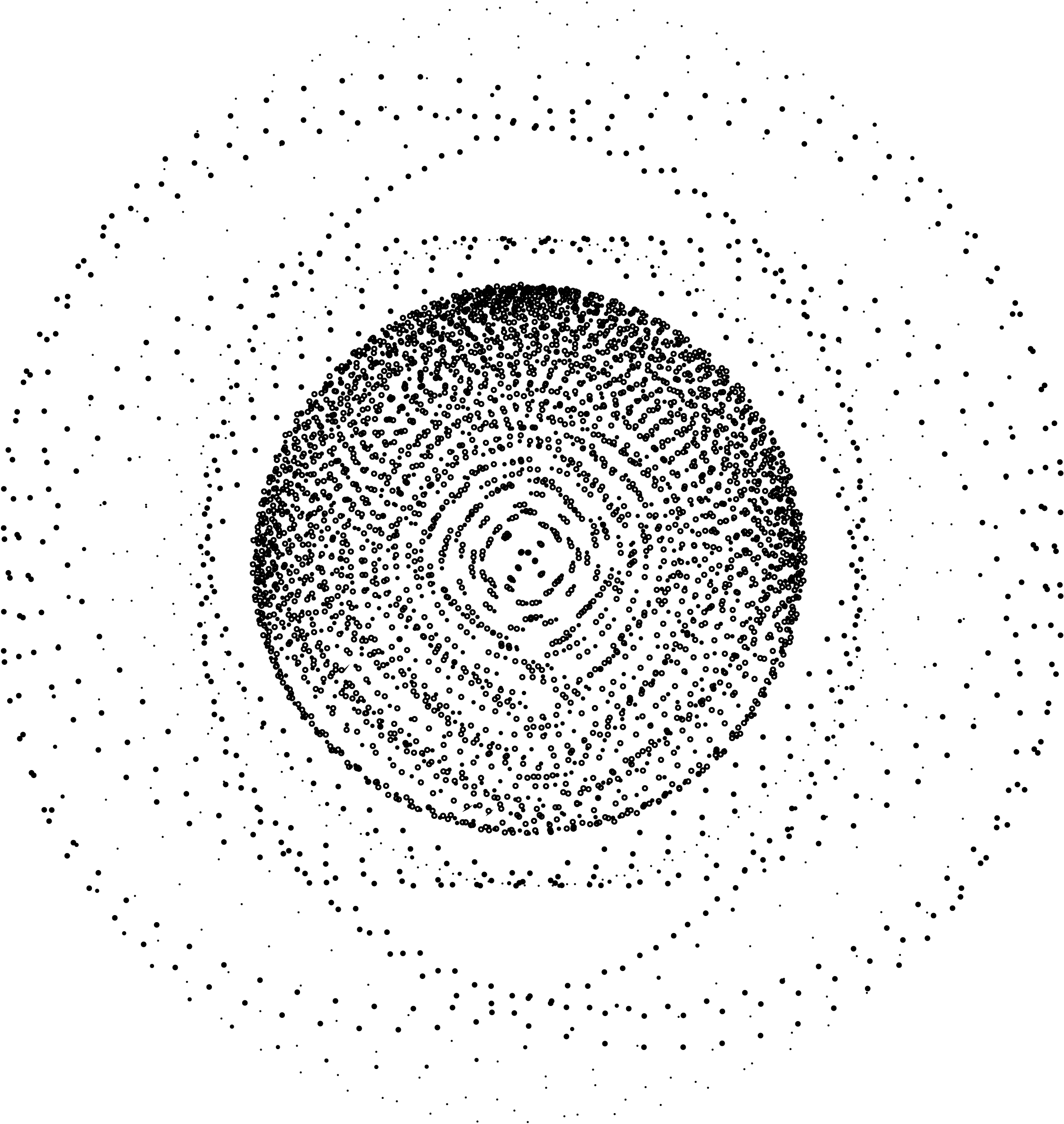

Science is undergoing a renaissance in the “data age”. Data-driven science is proving its power to detect undiscovered correlations and to find new solutions in health and disease management, environmental resilience, and more.

Reports suggest that some researchers now account for 5 TB of data each, and some of the largest universities have more than 1,000 such researchers. To put this into perspective, the 2009 3D film Avatar generated about 1 petabyte (1,000 terabytes) of data, which at the time was more than any other movie in history.
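As a rough back-of-the-envelope check on those figures, the short sketch below multiplies them out. The function name is illustrative only, and it assumes exactly 1,000 researchers (the lower bound quoted above) and decimal units (1 PB = 1,000 TB).

```python
TB_PER_PB = 1000  # decimal units, matching "1 petabyte (1,000 terabytes)"


def institution_storage_pb(researchers: int, tb_per_researcher: float) -> float:
    """Return the combined research data footprint in petabytes."""
    return researchers * tb_per_researcher / TB_PER_PB


# Assumption: 1,000 researchers at 5 TB each (the figures quoted above).
total_pb = institution_storage_pb(researchers=1000, tb_per_researcher=5)
avatar_pb = 1  # approximate data generated by Avatar, in petabytes

print(f"{total_pb:g} PB, about {total_pb / avatar_pb:g}x the Avatar dataset")
```

On these assumptions, a single large university would already hold around five times the data generated by Avatar.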

Unlike in the movie industry, however, research funding is increasingly contingent on long-term data storage. Becoming a petabyte-scale institution requires cost-effective, innovative forms of information processing that enable enhanced insight, decision making, and process automation.

How can we help take your university or research institution into the data age?

We are experts in delivering systems and know-how for life science computing at petabyte scale. Our Mediaflux platform delivers an automated research data management system to curate, ingest and tag scientific data with metadata, allowing greater findability, better research and reuse of data, and improved accessibility, whether the data is held in primary storage or deep archive.