As artificial intelligence (AI) advances, the power required to train and run AI models is rising at an astonishing rate. The high-performance GPUs behind AI workloads draw massive amounts of electricity, and as AI adoption grows, data centers are scrambling to keep up with demand. Ten years ago, a GPU in an HPC environment was deployed mainly to increase the perceived floating-point operations per second (FLOPS) the system could achieve, boosting its ranking on the TOP500 list. With the advent of NVIDIA’s Compute Unified Device Architecture (CUDA), GPUs are now easier to use than ever, and the AI disruption is well under way. The limits on AI are further compounded by the need for vast amounts of data storage, much of which resides on power-hungry spinning disk drives. However, a simple shift in data storage strategy, moving infrequently accessed AI data from disk to tape, can free power for higher-value tasks and save millions in energy costs.

The Energy Footprint of AI

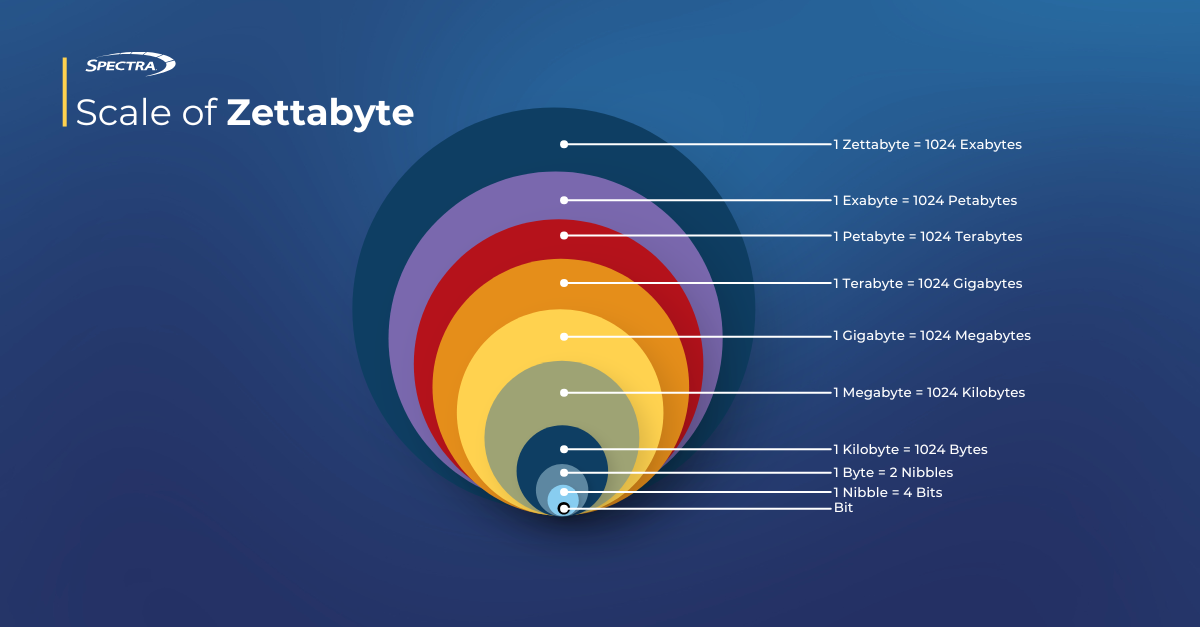

AI workloads, particularly in machine learning and deep learning, require specialized hardware, such as NVIDIA’s H100 and upcoming GB200 GPUs. In 2023 alone, an estimated 3.76 million AI GPUs were shipped, consuming approximately 14.3 terawatt-hours (TWh) of electricity annually. By 2026, AI chip shipments are projected to surpass 9 million units, pushing power consumption past 137 TWh per year, equivalent to the energy use of more than 10 million households. Each new generation of GPUs delivers more floating-point operations per second (FLOPS), sometimes even double, but it also requires more power as the number of transistors per chip climbs year over year. For example, the latest NVIDIA GB200 has a staggering 1,200-watt power footprint, and a rack of GB200s, such as the NVL72, can consume 120 kW.

Beyond compute power, AI data centers also require storage capacity, and a lot of it. Traditionally, the lion’s share of data was stored on spinning hard disk drives (HDDs), a technology that consumes power constantly whether data is being accessed or not. A single exabyte (EB) of data stored on disk consumes around 11 gigawatt-hours (GWh) per year, whereas the same amount of data stored on magnetic tape uses only 26 megawatt-hours (MWh) per year. That is a difference of more than 400 times, or nearly three orders of magnitude in power efficiency.

While a large language model can be stored in hundreds of terabytes, AI models based on rich media, such as imaging, self-driving vehicles, and video training, are far larger. For instance, a digital X-ray consumes up to 20 MB. In comparison, PET scans are 500 MB, and CT and MRI scans can balloon to 1 GB. Each of these image types continues to increase in resolution, consuming more storage. In a single medical practice, such storage capacities may be manageable. But how many lung X-rays will it take to train a model to detect lung cancer? (Hint: a lot.) AI’s sophistication hinges on the amount of high-quality data it can process.
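The disk-versus-tape comparison above can be checked with a few lines of arithmetic. The sketch below uses only the two per-exabyte figures quoted in this section; the function and variable names are illustrative.

```python
import math

# Per-exabyte annual energy figures as quoted in the text above.
DISK_MWH_PER_EB_YEAR = 11_000.0  # about 11 GWh per EB per year on disk
TAPE_MWH_PER_EB_YEAR = 26.0      # about 26 MWh per EB per year on tape


def energy_ratio(disk_mwh: float, tape_mwh: float) -> float:
    """Return how many times more energy disk uses than tape."""
    return disk_mwh / tape_mwh


ratio = energy_ratio(DISK_MWH_PER_EB_YEAR, TAPE_MWH_PER_EB_YEAR)
orders_of_magnitude = math.log10(ratio)

print(f"Disk uses roughly {ratio:.0f}x the energy of tape")
print(f"That is about {orders_of_magnitude:.1f} orders of magnitude")
```

With these rounded inputs the ratio comes out a little above 400, which is where "nearly three orders of magnitude" comes from.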

Unlock Massive Power Savings by Moving Data to Tape

Globally, an estimated 147,000 EB (147 ZB) of data was stored in 2024, a figure many believe is exaggerated; low estimates put the total at 8-20 ZB. Moving just 1 EB of data from disk to tape can save nearly 11 GWh per year, or about $1.8 million in power costs at $0.165 per kilowatt-hour. By transitioning just 20% of the 147,000 EB from disk to tape, data centers could claw back 323,024 GWh per year. To put this in perspective, assuming 147,000 EB of stored data: supporting the estimated 5 million GPUs that will sell in 2024 will take 74,000 GWh of power. Moving just 4.58% of the world’s stored data from disk to tape would recoup 74,000 GWh annually. Moving 20% would save 323,024 GWh annually, enough energy to power tens of millions of homes or to be repurposed for additional AI resources.
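The savings figures above follow from simple multiplication. Here is a minimal sketch of that arithmetic, assuming the rounded per-exabyte values quoted earlier (11 GWh on disk, 26 MWh on tape); the helper names are hypothetical. Because the inputs are rounded, the results land within a fraction of a percent of the quoted numbers rather than matching them digit for digit.

```python
# Inputs quoted in this article; the per-EB energy figures are rounded.
DISK_GWH_PER_EB_YEAR = 11.0
TAPE_GWH_PER_EB_YEAR = 0.026      # 26 MWh
PRICE_USD_PER_KWH = 0.165
KWH_PER_GWH = 1_000_000
WORLD_DATA_EB = 147_000           # high-end estimate of stored data
GPU_DEMAND_GWH = 74_000           # estimated need for 5 million GPUs

NET_SAVING_GWH_PER_EB = DISK_GWH_PER_EB_YEAR - TAPE_GWH_PER_EB_YEAR


def energy_saved_gwh(moved_eb: float) -> float:
    """Annual energy saved by moving `moved_eb` exabytes from disk to tape."""
    return moved_eb * NET_SAVING_GWH_PER_EB


def cost_saved_usd(moved_eb: float) -> float:
    """Annual power cost avoided at the assumed electricity price."""
    return energy_saved_gwh(moved_eb) * KWH_PER_GWH * PRICE_USD_PER_KWH


def fraction_to_recoup(target_gwh: float, total_eb: float) -> float:
    """Share of `total_eb` that must move to tape to free `target_gwh`."""
    return target_gwh / energy_saved_gwh(total_eb)


print(f"1 EB moved:   {energy_saved_gwh(1):.2f} GWh, ${cost_saved_usd(1):,.0f} per year")
print(f"20% moved:    {energy_saved_gwh(0.20 * WORLD_DATA_EB):,.0f} GWh per year")
print(f"Share needed: {fraction_to_recoup(GPU_DEMAND_GWH, WORLD_DATA_EB):.2%}")
print(f"100 PB moved: ${cost_saved_usd(0.1):,.0f} per year")
```

The same functions scale down to smaller archives: passing 0.1 EB (100 PB) gives a figure in the neighborhood of $180,000 per year.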

The Cost Savings from Power Reduction

Electricity costs for data centers vary, but at an average of $0.165 per kWh, the savings from transitioning 20% of the 147,000 EB of stored data to tape equate to $53.3 billion per year in reduced energy costs. Even if only a portion of this saved power is repurposed for AI workloads, it represents a major financial and sustainability win. Let’s assume that only 50% of the recouped power is reused, leaving the other half as direct cost savings:

- 161,512 GWh saved per year (half of the 323,024 GWh recouped by moving 20% of data to tape)
- $26.6 billion saved annually
- The remaining power could still support the 5 million additional AI GPUs coming in 2025 without the need to expand grid capacity
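The 50/50 split can be worked through as follows. This is a sketch under the article's own assumptions (323,024 GWh recouped, $0.165 per kWh, 74,000 GWh needed for 5 million GPUs); the function name and the `reuse_share` parameter are illustrative.

```python
RECOUPED_GWH = 323_024        # from moving 20% of 147,000 EB to tape
PRICE_USD_PER_KWH = 0.165
KWH_PER_GWH = 1_000_000
GPU_DEMAND_GWH = 74_000       # estimated need for 5 million GPUs


def split_savings(recouped_gwh: float, reuse_share: float):
    """Split recouped energy into a reused part and a saved part.

    Returns (reused_gwh, saved_gwh, saved_usd) on an annual basis.
    """
    reused_gwh = recouped_gwh * reuse_share
    saved_gwh = recouped_gwh - reused_gwh
    saved_usd = saved_gwh * KWH_PER_GWH * PRICE_USD_PER_KWH
    return reused_gwh, saved_gwh, saved_usd


total_usd = RECOUPED_GWH * KWH_PER_GWH * PRICE_USD_PER_KWH
reused, saved, saved_usd = split_savings(RECOUPED_GWH, reuse_share=0.5)

print(f"Total avoided cost:  ${total_usd / 1e9:.1f} billion per year")
print(f"Energy saved:        {saved:,.0f} GWh per year")
print(f"Cost saved:          ${saved_usd / 1e9:.1f} billion per year")
print(f"Covers GPU demand:   {reused >= GPU_DEMAND_GWH}")
```

Changing `reuse_share` shows how the balance shifts between direct savings and power freed for AI workloads.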

A Sustainable AI Future

As AI adoption continues to accelerate, data centers will face growing pressure to optimize power usage. While deploying more efficient AI hardware is one piece of the puzzle, the hidden energy drain of inefficient storage is an overlooked challenge. By leveraging tape storage for cold data, organizations can not only reduce their carbon footprint but also free up power for AI workloads without the need for costly infrastructure expansions.

Conclusion

AI is poised to reshape industries, but its energy appetite must be managed responsibly. Moving data from high-power spinning disks to energy-efficient tape archives is a practical, immediate solution that benefits both the environment and the bottom line. Organizations that embrace this shift will be able to scale AI capabilities sustainably while slashing operational costs, a win-win for businesses and the planet. You don’t have to be at the exabyte scale to reap the benefits of tape. Moving 100 PB of data from disk to tape saves about $180,000 per year, so the tape storage pays for itself in a matter of years on power savings alone. Some data centers will simply not have enough power available to meet AI’s power consumption. By moving cold data to a medium that consumes less power while still meeting the required service level for performance, organizations can adjust their mission to meet the challenges of an AI world.

Are you ready to optimize your data storage and reduce power costs? Schedule a meeting with Spectra Logic to learn more about integrating tape archives into your AI-driven data center strategy.

Guest Contributor

Matt Starr is the Field CTO and Director of Federal and Enterprise Sales at Spectra Logic.